Showing posts with label Internet Explorer. Show all posts

Resuming Partial Downloads

Suppose you started to download a big file, like an ISO image or a movie. If your connection drops or your browser crashes after a considerable amount has been downloaded, trying to download the file again will start from scratch. That is not a big problem with small files, or if you had downloaded only a small part of the file in the first place, but if you had already downloaded something like 90% of it, you certainly do not want to start all over again.

Luckily there is a solution for that problem:

1- Download wget. It comes preinstalled on most Linux distributions, but you can also use it on Windows.

2- Create a backup of your partial download, if you want.

3- Place wget and your file in the same folder. (You can also keep the file elsewhere and point wget at that folder with -P, like: wget -c -P c:\folder http://www.example.com/partialfile.iso)

4- Open a command prompt and navigate to that folder.

5- Type:

wget -c http://www.example.com/partialfile.iso

The -c option makes wget check whether there is a partial download in the folder and how much of it has already been downloaded, so it can continue from that point. For fresh downloads you don't need to add it.
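To make the idea behind -c more concrete, here is a minimal Python sketch of the bookkeeping involved for an HTTP download: look at how many bytes are already on disk and ask the server for the rest with a Range header. The function names are made up for illustration, and this is not wget's actual code. It also assumes the server supports range requests; if it does not, resuming will not work.

```python
import os

def resume_offset(path):
    """Return how many bytes of the file are already on disk (0 if it is missing)."""
    return os.path.getsize(path) if os.path.exists(path) else 0

def range_header(path):
    """Build the HTTP header that asks the server for the missing bytes only."""
    offset = resume_offset(path)
    # An empty dict means "fresh download": no Range header is needed.
    return {"Range": f"bytes={offset}-"} if offset > 0 else {}
```

For a partial file of 1234 bytes this yields a request for everything from byte 1234 onward, which is why the file name on disk has to match the one wget expects.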

Internet Explorer will add an extra extension to your partial download. You need to delete that part from the file name.

partialfile.iso.snhkgvz.partial

Google Chrome adds .crdownload to the file name:

partialfile.iso.crdownload

Advanced options:

-O: if you want to save a file with a different name, you can add -O

wget -O ubuntu.iso -c http://www.example.com/partialfile.iso

--limit-rate: for setting a speed limit to your download

wget --limit-rate=400k -c http://www.example.com/partialfile.iso

-i: download multiple files. You can put all your download URLs on separate lines in a text file and pass that file to wget in one go, instead of running a new command for every file.

wget -i textfile.txt

-r -A: download every file of a specific type from a website. For example, if you want to download all the PDFs:

wget -r -A.pdf http://www.example.com

FTP: if the file you want to download is on an FTP server, you do not need a special option. Just give wget the full ftp:// URL:

wget ftp://example.com/partialfile.iso
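The renaming step mentioned earlier in this post (removing the extra extension the browser appends to a partial download) can also be scripted. The sketch below is only an illustration: the helper name is hypothetical, and the two suffix patterns are taken from the Internet Explorer and Chrome examples in this post, so other browsers or versions may need different ones.

```python
import re

# Suffix patterns based on the two examples in this post (an assumption;
# other browsers or browser versions may use different suffixes).
PARTIAL_SUFFIXES = [
    re.compile(r"\.[A-Za-z0-9]+\.partial$"),  # Internet Explorer, e.g. .snhkgvz.partial
    re.compile(r"\.crdownload$"),             # Google Chrome
]

def original_name(partial_name):
    """Return the file name with a known partial-download suffix removed."""
    for pattern in PARTIAL_SUFFIXES:
        stripped, count = pattern.subn("", partial_name)
        if count:
            return stripped
    # No known suffix found: leave the name untouched.
    return partial_name

print(original_name("partialfile.iso.snhkgvz.partial"))
print(original_name("partialfile.iso.crdownload"))
```

Both calls print partialfile.iso, which is the name wget -c will look for in the folder.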

Internet Explorer Prints Blank Pages

file:///C:/Users/Username/AppData/Local/Temp/xsadd.htm

I looked at the temp folder (Win+R, %TEMP%) and found that the file named xsadd.htm was there, so there was nothing wrong with the file. After a bit of research I found out that some drive cleaning programs (CCleaner, SlimComputer, etc.) delete the necessary "low" folder in the temp directory, where IE creates its temporary files for the print job.

As a quick fix, I tried creating the "low" folder myself and then changing its permissions, and this solved my problem.

To change the permissions, open a command prompt with administrative rights (Win+R, cmd) and type:

icacls C:\Users\Username\AppData\Local\Temp\low /setintegritylevel (OI)(CI)low

The directory structure may not be the same for you, so look at the footer of your blank page to find the right path.
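The quick fix above (create the folder, then set its integrity level) can be sketched in Python as follows. This is only a sketch under assumptions: the helper name is hypothetical, it assumes the folder to fix is the "low" folder inside the temp directory, and the icacls call itself only makes sense on Windows from an elevated prompt, so it is skipped unless run=True is passed.

```python
import os
import subprocess

def low_folder_fix(temp_dir, run=False):
    """Create <temp_dir>/low and return the icacls command that marks it low integrity."""
    low_dir = os.path.join(temp_dir, "low")
    os.makedirs(low_dir, exist_ok=True)  # no error if the folder already exists
    command = ["icacls", low_dir, "/setintegritylevel", "(OI)(CI)low"]
    if run:
        # Only meaningful on Windows, from a prompt with administrative rights.
        subprocess.run(command, check=True)
    return command
```

Pass in the temp directory shown in the footer of your own blank page rather than a hard-coded path, since it can differ between machines.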

There is also a Microsoft Fix it solution for this problem.

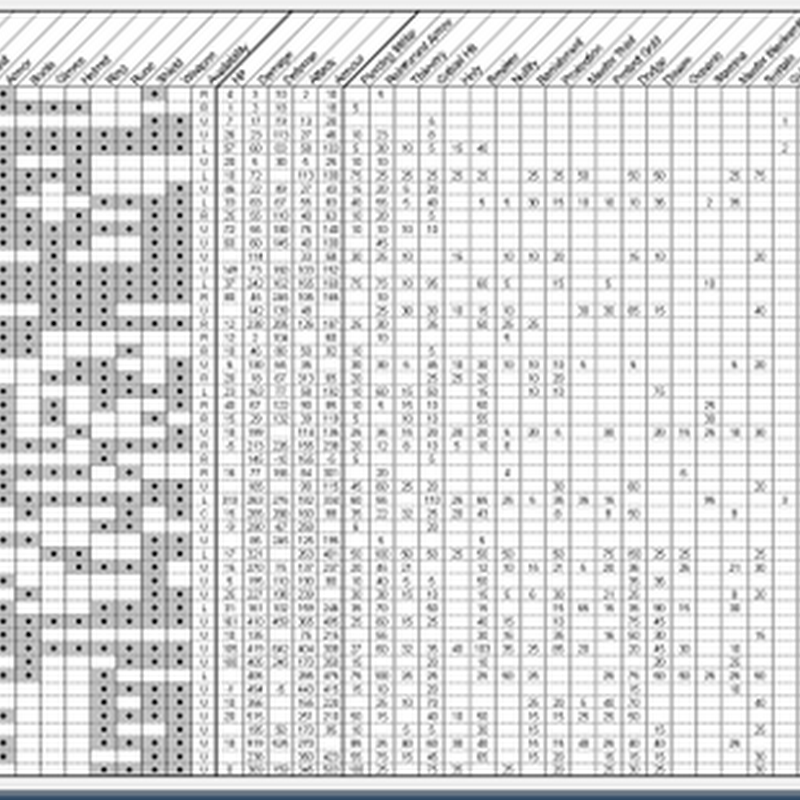

War of the Browsers

Here is the most popular browser, Firefox. It's like MySpace back when it was more popular than Facebook.

Here is Opera, which has everything one would need for surfing by default, without the need for add-ons.

from: myconfinedspace
